Sorry - why isn't everyone using Polars now?

Like, dude, why are you still using pandas/black/poetry/(another non-rust python util)?

Pandas is synonymous with data science. I used to work more in the data space: I was Head of Data Science at Waybridge and did a ton of data science in my own startup, Wattson Blue. More recently, though, I have been doing more software engineering, so I have not actively been looking at the most performant way of playing with data (since everyone just uses pandas!).

Being on holidays this week, I decided to finally investigate different libraries and options for myself. In particular, I decided to give Polars a try, and OMG, it's not even close.

For me it's a bit like the first time I tried Ruff (as a replacement for black and isort), or the first time my intern Danish showed me uv (in place of poetry).

Like, dude, why are you still using pandas/black/poetry/(another non-rust python util)?

E.g. compare loading the IMDB titles and ratings CSV files on my M1 Pro MacBook with 16GB of RAM:

(feb2025) ➜ Feb2025 git:(master) ✗ uv run python imdb.py

'load'('title.basics.tsv', 'pandas')) took: 13.4196s - shape: 11mm, 9

'load'('title.ratings.tsv', 'pandas'), {}) took: 0.4610s - shape: 1mm, 3

'load'('title.basics.tsv', 'polars'), {}) took: 0.5464s - shape: 11mm, 9

'load'('title.ratings.tsv', 'polars'), {}) took: 0.0222s - shape: 1mm, 3

Even if I go small and load CSVs with, say, 1000 rows, Polars is still substantially quicker, so the excuse of "but my data is not that big" does not apply, I am afraid.

And the helpful errors and warnings - side note, why do I not write better errors and warnings like this?

PolarsInefficientMapWarning: Expr.map_elements is significantly slower than the native expressions API. Only use if you absolutely CANNOT implement your logic otherwise. Replace this expression...

- pl.col("numVotes").map_elements(lambda x: ...)

with this one instead:

+ pl.col("numVotes") / 1000000.0

Thanks Polars.

How about doing actual data manipulation?

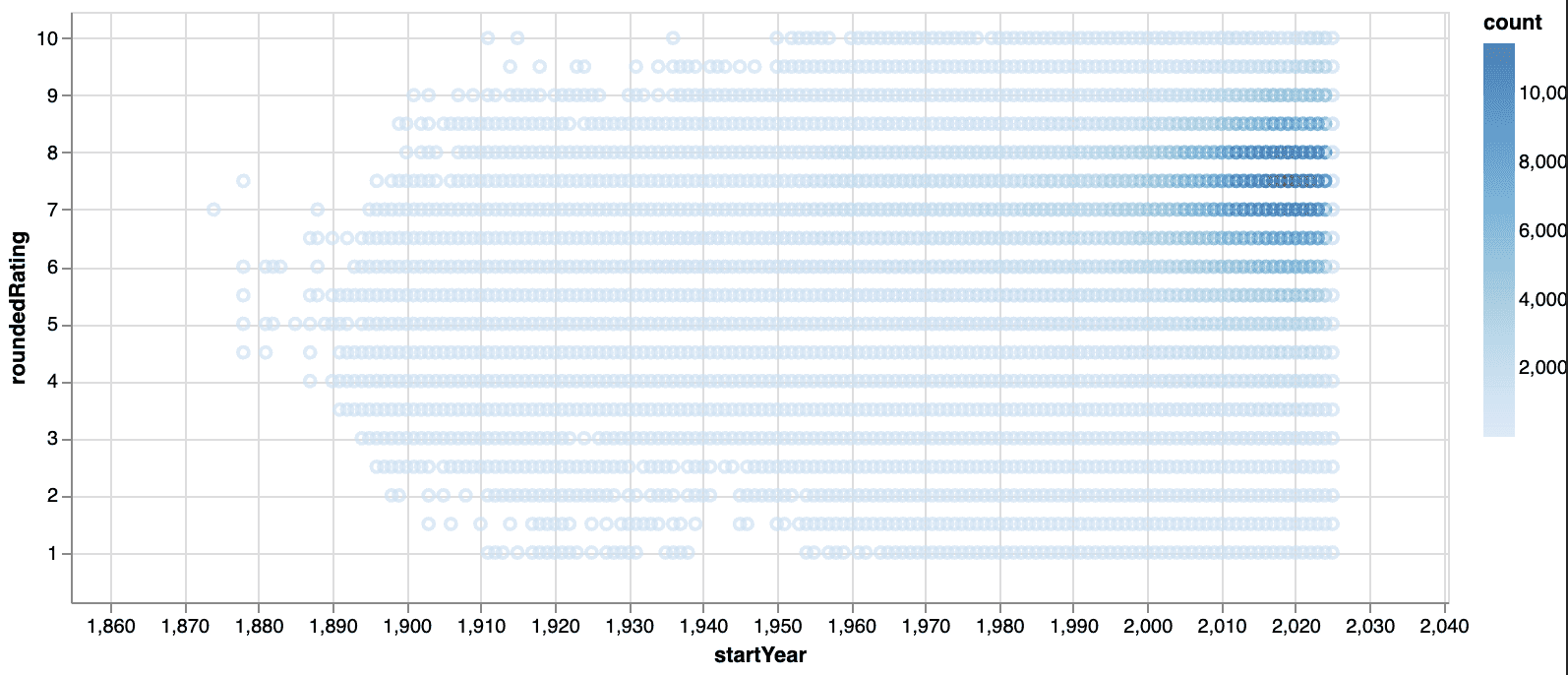

That's faster too! Here is a simple example of looking at a breakdown of movie ratings by year:

This takes 3s in pandas vs 1s in polars

```shell
2.97 s ± 92 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
1.03 s ± 30.9 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
```

Here is the code as an example, which I am sure could be made more efficient!

pandas_full = pd.merge(pandas_basics_df, pandas_ratings_df, on='tconst', how='outer')

pandas_full['roundedRating'] = ((pandas_full['averageRating'] * 2).round(0) / 2)

pandas_year_and_rating_df = pandas_full.groupby(['startYear', 'roundedRating'])['numVotes'].count()

vs the polars version, which takes about 1s:

full = polars_basics_df.join(polars_ratings_df, on='tconst', how='full')

full = full.with_columns(((pl.col('averageRating') * 2).round(0) / 2).alias('roundedRating'))

year_and_rating_df = full.group_by(['startYear', 'roundedRating']).len()

So again, massive gains to be had.

(And this is not even the most efficient way of writing either version: with Polars you can use lazy loading and chain all the commands together for an extra 20% or so. You can get similar gains on the pandas side too, so best keep it simple.)

And the hidden advantage: The memory profile!

You might have heard this rule of thumb from the creator of pandas (see here)

pandas rule of thumb: have 5 to 10 times as much RAM as the size of your dataset

Although pandas has tried to address this issue with the new PyArrow backend, you don't have such issues in polars:

- Zero-copy operations: Polars uses Apache Arrow under the hood, which means many operations don't need to copy data in memory. In pandas, simple operations often create new copies of your data.

- Lazy evaluation: Polars can optimise your query plan before execution. Write something like:

df.lazy().filter(pl.col("value") > 0).group_by("category").agg(pl.col("value").sum()).collect()

And Polars will figure out the most efficient way to run it.

Polars just seems smarter in memory management - see more in this stackoverflow post

But I have a lot of code using pandas already

As described in the polars documentation here, scikit-learn and XGBoost work out of the box with polars dataframes. Moreover, thanks to LLMs, migrating old pandas code to polars should be a doddle.

Conclusion

So stop it with the excuses, learn the Polars API, switch to polars, and let's reduce the memory requirements on all those docker containers.

PS. numpy.float64 vs float

Another quick performance reminder ('of course I knew this already!' I hear you say): numpy arrays that contain native Python floats can be substantially slower than arrays with numpy-specific data types (e.g. numpy.float64).

Just compare the following methods for matrix multiplication (of a 10k x 1k matrix with itself):

1. A numpy 2D-array

2. A PyArrow-backed pandas dataframe converted to numpy using df.to_numpy(dtype=np.float64)

3. A PyArrow-backed pandas dataframe converted to numpy using df.to_numpy()

4. A polars dataframe converted to numpy using df.to_numpy()

5. A standard pandas dataframe converted to numpy using df.to_numpy()

Feb2025 git:(master) ✗ uv run python example_polars_vs_pands2.py

direct mat mul time: 0.0037 seconds

pyarrow np.float64 mat mul time: 0.0086 seconds

pyarrow float mat mul time: 24.7052 seconds

polars mat mul time: 0.0078 seconds

pandas mat mul time: 0.0039 seconds

So keep that in mind: when converting dataframes into numpy arrays, you might be better off specifying the data type, e.g.

array = df.to_numpy(dtype=np.float64)

That dtype argument could really save your bacon down the road.
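You can reproduce the effect without any dataframe library. This is a sketch, assuming the slow case boils down to an object-dtype array holding Python floats; `fast`, `slow` and `time_matmul` are names made up for the example, and the matrix is kept small so the slow path finishes quickly.

```python
import time

import numpy as np

rng = np.random.default_rng(0)
fast = rng.random((100, 100))  # dtype float64
slow = fast.astype(object)     # same values, stored as Python float objects


def time_matmul(a):
    """Multiply a square matrix with itself; return (result, seconds)."""
    start = time.perf_counter()
    out = a @ a
    return out, time.perf_counter() - start


fast_out, fast_seconds = time_matmul(fast)
slow_out, slow_seconds = time_matmul(slow)

print(f"float64 mat mul time: {fast_seconds:.4f} seconds")
print(f"object  mat mul time: {slow_seconds:.4f} seconds")

# Converting back to float64 restores the fast path.
restored = np.asarray(slow, dtype=np.float64)
print(restored.dtype)
```

Checking `array.dtype` right after the conversion is a cheap way to catch this before it costs you 24 seconds.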

I do acknowledge that polars is slower than pandas here. However, when you go to a 10k x 10k matrix, the performance is much closer:

direct mat mul time: 2.5243 seconds

np.float64 mat mul time: 2.6598 seconds

[skipping the pyarrow float method as it will take too long]

polars mat mul time: 2.6275 seconds

pandas mat mul time: 2.5722 seconds

How did I end up here?

Well, I tried to be clever and use pandas' PyArrow backend - however, this ends up giving Python float rather than numpy.float64 by default when converting dataframes to numpy arrays. So watch out!

PPS. parquet vs CSV

It's all too easy to save data to CSV when we want to "cache" some results to come back to later. However, there is very little reason to do that, unless you want to explore the CSV manually.

Parquet is faster on all fronts, especially if you're stuck with pandas. Plus, it gives you:

- Column-based compression for better storage efficiency

- Schema enforcement to prevent data type mishaps

- Predicate and projection pushdown for faster querying (only read the rows and columns you need!)

Loading from a CSV:

'load'('title.basics.tsv', 'pandas/pyarrow'), {}) took: 12.9046s - shape: 11.442166mm, 9

'load'('title.basics.tsv', 'pandas'), {}) took: 13.0133s - shape: 11.442166mm, 9

'load'('title.basics.tsv', 'polars'), {}) took: 0.3630s - shape: 11.442166mm, 9

Same data loaded from a parquet file:

'load_parquet'('title.basics.parquet', 'pandas/pyarrow'), {}) took: 0.3369s - shape: 11.442166mm, 9

'load_parquet'('title.basics.parquet', 'pandas'), {}) took: 7.4443s - shape: 11.442166mm, 9

'load_parquet'('title.basics.parquet', 'polars'), {}) took: 0.3119s - shape: 11.442166mm, 9

(and the file size is a lot smaller by default - in our case 254MB vs 987MB, unzipped!)

PPPS. What the hell is Narwhals?

So apparently Narwhals is a library that provides a set of typed APIs/tools that allow you to write functions that support both pandas and polars.

Think of it as training wheels for your Polars journey - you can gradually migrate your codebase while keeping everything working. You write your functions once with Narwhals' typed interfaces, and they'll work with both pandas and polars DataFrames. Pretty neat when you're dealing with a large existing codebase!